Prediction and Explanation of Privacy Risk on Mobility Data with Neural Networks

Exploratory: Social Impact of AI and Explainable ML

One of the requirements of the GDPR is privacy risk assessment, i.e., the task of assessing the privacy risk of each individual in a dataset. In the literature, the PRUDEnce [1] framework assesses privacy risk by simulating privacy attacks in which the attacker holds some background knowledge that makes their goal easier to achieve. Mathematically speaking, the privacy risk of an individual is the probability of being re-identified, given the background knowledge the adversary has. An exhaustive evaluation must therefore consider every background knowledge configuration the adversary may have. This process is combinatorial, and hence expensive and time-consuming.
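To see why the exhaustive evaluation is combinatorial, the following minimal sketch simulates a simplified location attack. It assumes the adversary knows `k` distinct locations visited by the target, and that the risk for one background knowledge instance is one over the number of individuals matching it, with the worst case taken as the individual's risk. The function name `privacy_risk`, the toy data, and this specific attack are illustrative assumptions, not the PRUDEnce implementation.

```python
from itertools import combinations

def privacy_risk(trajectories, k):
    """Worst-case re-identification risk per user (illustrative sketch).

    Assumption: the adversary knows k distinct locations visited by the target.
    """
    # Distinct locations visited by each user
    visited = {user: set(traj) for user, traj in trajectories.items()}
    risks = {}
    for user, locs in visited.items():
        worst = 0.0
        size = min(k, len(locs))
        # Enumerate every background knowledge instance of the given size:
        # this loop is the combinatorial part of the assessment.
        for known in combinations(sorted(locs), size):
            known = set(known)
            # Users whose visited locations are compatible with what is known
            matches = sum(1 for other in visited.values() if known <= other)
            worst = max(worst, 1.0 / matches)
        risks[user] = worst
    return risks

# Hypothetical toy dataset
trajectories = {
    "u1": ["home", "work", "gym"],
    "u2": ["home", "work"],
    "u3": ["home", "work"],
    "u4": ["home", "park"],
}
risks = privacy_risk(trajectories, k=1)
```

In this toy dataset, `u1` and `u4` each visit a location nobody else does, so a single known location is enough to single them out, while `u2` and `u3` remain indistinguishable from each other and from `u1` on their shared locations. The number of combinations grows quickly with `k` and with trajectory length, which is the cost the approach in this article aims to avoid.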

For this reason, we propose a new approach that uses Recurrent Neural Networks to predict the privacy risk for human mobility data [5], e.g., trajectories [2]. Trajectories are typically long sequences, so we select Long Short-Term Memory (LSTM) networks [3], which are designed to capture long-range dependencies. In this way, we simply feed the LSTM model with the raw trajectory to predict the risk of privacy breaches.

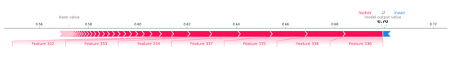

The results show good overall performance. In particular, the models behave conservatively: they are good at predicting the high privacy risk class, which is the class that would put people's privacy in danger if predicted wrongly.

Unfortunately, a major limitation of machine learning models, and of neural networks in particular, is that the internal reasoning of the model is obscure and difficult to understand. In the context of data privacy, however, it is important to explain to end-users why they have been assigned a particular privacy risk value. For this reason, our approach uses explainable AI methods to provide a local explanation to the end-user. We use SHAP [4], a model-agnostic explanation framework that works with any kind of input and outputs feature importances. SHAP can provide both local and global explanations; for our setting, we were interested in local explanations to give to the end-user. We applied SHAP's DeepExplainer, an algorithm that builds on DeepLIFT and is designed for deep learning models. In Figures 1 and 2, we report two explanations obtained by applying SHAP to our records: red points push the output value higher, while blue ones push it lower.
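To make the idea of a local, additive explanation concrete, the sketch below computes exact Shapley values by brute force for a tiny scoring function. This is not what DeepExplainer does internally (it approximates such values efficiently for neural networks), and `toy_model`, the input `x`, and the all-zero `baseline` are hypothetical choices made only for illustration.

```python
from itertools import permutations

def shapley_values(model, x, baseline):
    """Exact Shapley values of each feature of x with respect to a baseline input."""
    n = len(x)
    phi = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        current = list(baseline)
        prev = model(current)
        # Switch features from baseline to their actual value one at a time
        # and credit each feature with the change in output it causes.
        for i in order:
            current[i] = x[i]
            new = model(current)
            phi[i] += new - prev
            prev = new
    # Average the marginal contributions over all feature orderings
    return [p / len(orders) for p in phi]

def toy_model(v):
    # Hypothetical scoring function with one interaction term
    return 0.5 * v[0] + 0.3 * v[1] * v[2]

x = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
```

The values sum to the difference between the model output on `x` and on the baseline. A positive value corresponds to a feature pushing the output higher (red in the figures) and a negative one to a feature pushing it lower (blue).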

To analyze the results, we compared the output of SHAP with the visit frequency of the locations, to see whether there is a correlation with the risk classes. In particular, for each user we compared the top 3 most important locations according to SHAP with the top 3 most frequently visited locations; we call this measure relative frequency. The results show a correlation between the classes and the relative frequency metric. For the low risk class, the relative frequency is high, meaning that on average the locations most important for SHAP are also the user's most frequently visited ones. This is an important result, since it suggests that the prediction for people with a low privacy risk is driven by their frequently visited places. By contrast, users in the high risk class have a low relative frequency, indicating that in this case the most important locations are not the frequently visited ones.
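The comparison described above can be sketched as follows. The exact formula for relative frequency is not given here, so this sketch assumes it is the fraction of the top 3 SHAP locations that also appear among the top 3 most visited locations, and that importance is ranked by absolute SHAP value. The function name, the per-location importance dictionary, and the toy data are hypothetical.

```python
from collections import Counter

def relative_frequency(trajectory, importance, top_n=3):
    """Overlap between the most visited and the most important locations.

    Assumption: importance maps each location to a single SHAP-like score,
    and locations are ranked by the absolute value of that score.
    """
    # Top-n locations by number of visits
    counts = Counter(trajectory)
    most_visited = {loc for loc, _ in counts.most_common(top_n)}
    # Top-n locations by absolute importance
    ranked = sorted(importance, key=lambda loc: abs(importance[loc]), reverse=True)
    most_important = set(ranked[:top_n])
    return len(most_visited & most_important) / top_n

# Hypothetical user: visit sequence and per-location importance scores
trajectory = ["home"] * 4 + ["work"] * 3 + ["gym"] * 2 + ["cafe", "park"]
importance = {"home": 0.40, "work": -0.25, "gym": 0.02, "cafe": 0.30, "park": 0.01}
score = relative_frequency(trajectory, importance)
```

Here two of the three most important locations (`home` and `work`) are also among the three most visited, so the score is two thirds. Under this reading, a score close to 1 matches the pattern reported for the low risk class, and a score close to 0 the pattern reported for the high risk class.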

References

[1] Pratesi, F., Monreale, A., Trasarti, R., Giannotti, F., Pedreschi, D., Yanagihara, T.: PRUDEnce: a system for assessing privacy risk vs utility in data sharing ecosystems. Transactions on Data Privacy 11(2), 139–167 (2018)

[2] Naretto, F., Pellungrini, R., Nardini, F.M., Giannotti, F.: Prediction and Explanation of Privacy Risk on Mobility Data with Neural Networks. In: XKDD (2020)

[3] Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997)

[4] Lundberg, S.M., Lee, S.I.: A unified approach to interpreting model predictions. In: NIPS, pp. 4765–4774 (2017)

[5] Pellungrini, R., Pappalardo, L., Pratesi, F., Monreale, A.: A data mining approach to assess privacy risk in human mobility data. ACM Transactions on Intelligent Systems and Technology, Article No. 31 (2017). https://doi.org/10.1145/3106774

Written by: Francesca Naretto

Revised by: Luca Pappalardo