Reducing Exposure to Harmful Content in Recommender Systems

The recommender systems employed by digital media platforms typically showcase content that is similar to the content a user has already consumed. When that content is biased, such recommendations risk fuelling radicalization. One option to mitigate the radicalization risks posed by digital media platforms is to modify their recommendations. Motivated by empirical findings [3], recent work at the intersection of algorithm design and computational social science has made first strides toward formalizing this scenario as an optimization problem under budget constraints [1,2]. However, the resulting models, objective functions, and algorithms have a number of theoretical and practical drawbacks.

Our ongoing project, which was kickstarted by the author’s SoBigData++ Transnational Access (TNA) visit at KTH Stockholm, seeks to eliminate some of these drawbacks. It aims to provide a more realistic model and objective function for the recommendation rewiring problem, along with an efficient optimization algorithm to address the problem under the new model. Thus far, we have developed a more natural formulation of the recommendation rewiring problem, which captures user behavior and anti-radicalization rewiring objectives more accurately than previous proposals. We have analyzed our model theoretically and proved that solving the resulting optimization problem is computationally hard in most settings (just like the less natural problem studied in [1]). We have begun experimenting with competing methods under our new model, and we are currently developing an efficient algorithm to optimize our new objective function.

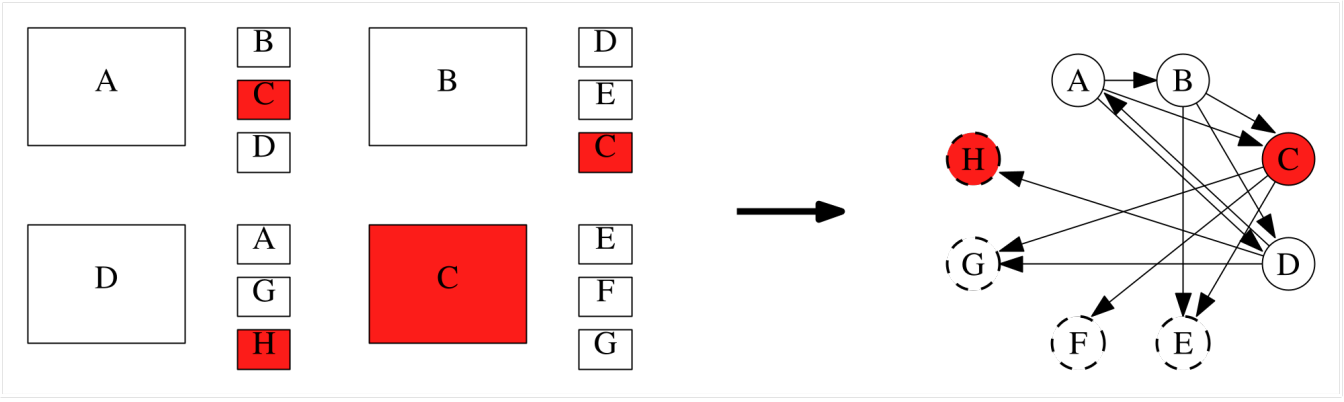

Following prior work [1], we model a digital media platform as a directed graph in which nodes represent content items, directed edges represent recommendations, and each content item carries a label indicating whether it is considered harmful (Figure 1). We also use edge rewiring as our main graph operation for improving the objective function; intuitively, rewiring an edge corresponds to replacing a recommended item with another one (e.g., when watching YouTube video A, the user is now recommended video C rather than video B). However, we model users more realistically than prior work, and our optimization objective assesses the reachability of harmful content much more holistically.

Figure 1: Modeling a digital media platform with some content labeled “harmful” as a directed graph with differently colored nodes. Dashed lines around a node indicate that its outgoing edges are not shown in the figure.
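To make the graph model concrete, the following Python sketch encodes a toy recommendation graph as adjacency lists, implements the edge-rewiring operation, and greedily applies up to `budget` rewirings. The exposure measure used here (`exposure`: the expected number of harmful items a user sees in `steps` clicks, starting at a uniformly random item and always clicking a uniformly random recommendation) is a hypothetical stand-in chosen for illustration; it is not the objective function developed in this project, which this report does not spell out. The function names (`exposure`, `rewire`, `greedy_rewire`) and the toy graph are likewise illustrative assumptions.

```python
# Minimal sketch: hypothetical objective and names, not this project's method.
Graph = dict[str, list[str]]  # content item -> items it recommends (out-edges)


def exposure(graph: Graph, harmful: set[str], steps: int) -> float:
    """Hypothetical objective: expected number of harmful items seen in `steps`
    clicks by a user who starts at a uniformly random item and always clicks a
    uniformly random recommendation. Assumes every item is a key of `graph`;
    items without recommendations simply end the walk."""
    prob = {v: 1.0 / len(graph) for v in graph}  # distribution over current item
    total = 0.0
    for _ in range(steps):
        nxt = {v: 0.0 for v in graph}
        for u, p in prob.items():
            for w in graph[u]:
                nxt[w] += p / len(graph[u])  # split mass evenly over recommendations
        prob = nxt
        total += sum(prob[v] for v in harmful)  # harmful mass reached at this click
    return total


def rewire(graph: Graph, u: str, old: str, new: str) -> Graph:
    """Return a copy of `graph` in which u's recommendation `old` is replaced by `new`."""
    if old not in graph[u] or new in graph[u] or new == u:
        raise ValueError(f"invalid rewiring ({u}: {old} -> {new})")
    g = {v: list(outs) for v, outs in graph.items()}
    g[u][g[u].index(old)] = new
    return g


def greedy_rewire(graph: Graph, harmful: set[str], steps: int, budget: int):
    """Apply up to `budget` rewirings, each time picking the one that lowers
    `exposure` the most (brute force; no approximation guarantee)."""
    g, chosen = graph, []
    for _ in range(budget):
        best, best_val = None, exposure(g, harmful, steps)
        for u in g:
            for old in g[u]:
                for new in g:
                    # Only redirect to non-harmful items not already recommended by u.
                    if new == u or new in g[u] or new in harmful:
                        continue
                    val = exposure(rewire(g, u, old, new), harmful, steps)
                    if val < best_val:
                        best, best_val = (u, old, new), val
        if best is None:  # no single rewiring reduces exposure any further
            break
        g = rewire(g, *best)
        chosen.append(best)
    return g, chosen


# Toy example (hypothetical data): item "B" is labeled harmful.
toy = {"A": ["B", "C"], "B": ["A", "C"], "C": ["A", "D"], "D": ["C", "A"]}
print(exposure(toy, {"B"}, steps=1))  # 0.125
new_toy, moves = greedy_rewire(toy, {"B"}, steps=1, budget=1)
print(moves)  # [('A', 'B', 'D')]
print(exposure(new_toy, {"B"}, steps=1))  # 0.0
```

In the toy run, the only recommendation leading to "B" is redirected from "A" to "D", which removes one-click exposure entirely. The brute-force loop evaluates every candidate rewiring in each round, so it only scales to toy graphs, and we make no claim about its approximation quality; given the hardness results mentioned above, it should be read as a naive baseline rather than as the efficient algorithm this project is developing.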

The formalizations of the rewiring problem studied in past work were relatively easy to analyze but unnatural; more natural formalizations are harder to analyze and, consequently, harder to tackle with algorithms that come with guarantees. Since the potential of recommendation rewiring to reduce exposure to harmful content on digital media platforms depends heavily on the chosen formalization, more work on analytical techniques and approximation algorithms for such formalizations is desirable. As we complete the project started during the author’s SoBigData++ TNA visit, we hope to provide a theoretically sound and scalable approximation algorithm for the most natural formalization of the rewiring problem studied to date. Beyond this immediate next step, we see two promising directions for future work. First, on the theoretical side, we currently lack a comprehensive overview of hardness results for the rewiring problem and its variants, which would be very useful for algorithm design. Second, on the applied side, a framework supporting the development of theoretically sound approximation algorithms for variants of the rewiring problem would be highly valuable in practice.

Report from a TNA experience at KTH Stockholm

Written by: Corinna Coupette | Max Planck Institute for Informatics

References

[1] Fabbri, Francesco, Yanhao Wang, Francesco Bonchi, Carlos Castillo, and Michael Mathioudakis. "Rewiring What-to-Watch-Next Recommendations to Reduce Radicalization Pathways." In Proceedings of the ACM Web Conference 2022, pp. 2719-2728. 2022.

[2] Haddadan, Shahrzad, Cristina Menghini, Matteo Riondato, and Eli Upfal. "RePBubLik: Reducing Polarized Bubble Radius with Link Insertions." In Proceedings of the 14th ACM International Conference on Web Search and Data Mining, pp. 139-147. 2021.

[3] Ribeiro, Manoel Horta, Raphael Ottoni, Robert West, Virgílio A. F. Almeida, and Wagner Meira Jr. "Auditing Radicalization Pathways on YouTube." In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, pp. 131-141. 2020.