Detecting Misinformation on YouTube Videos

Authors: Shakshi Sharma (University of Tartu, Estonia), Rajesh Sharma (University of Tartu, Estonia)

Today, Online Social Media (OSM) platforms such as Facebook, YouTube, and Twitter are used by billions of individuals, and they have become channels for establishing narratives, conducting propaganda, and disseminating misinformation.

According to recent research, YouTube, the largest video-sharing platform with more than two billion users, is commonly used to disseminate misinformation and hateful videos. According to a survey, 74% of adults in the USA use YouTube, and approximately 500 hours of video are uploaded to the platform every minute, which makes YouTube hard to monitor. This volume makes YouTube fertile ground for injecting misinformation videos, which can be difficult to detect amid the avalanche of content. The result is a serious problem that calls for new methods to curb the spread of misinformation on the platform.

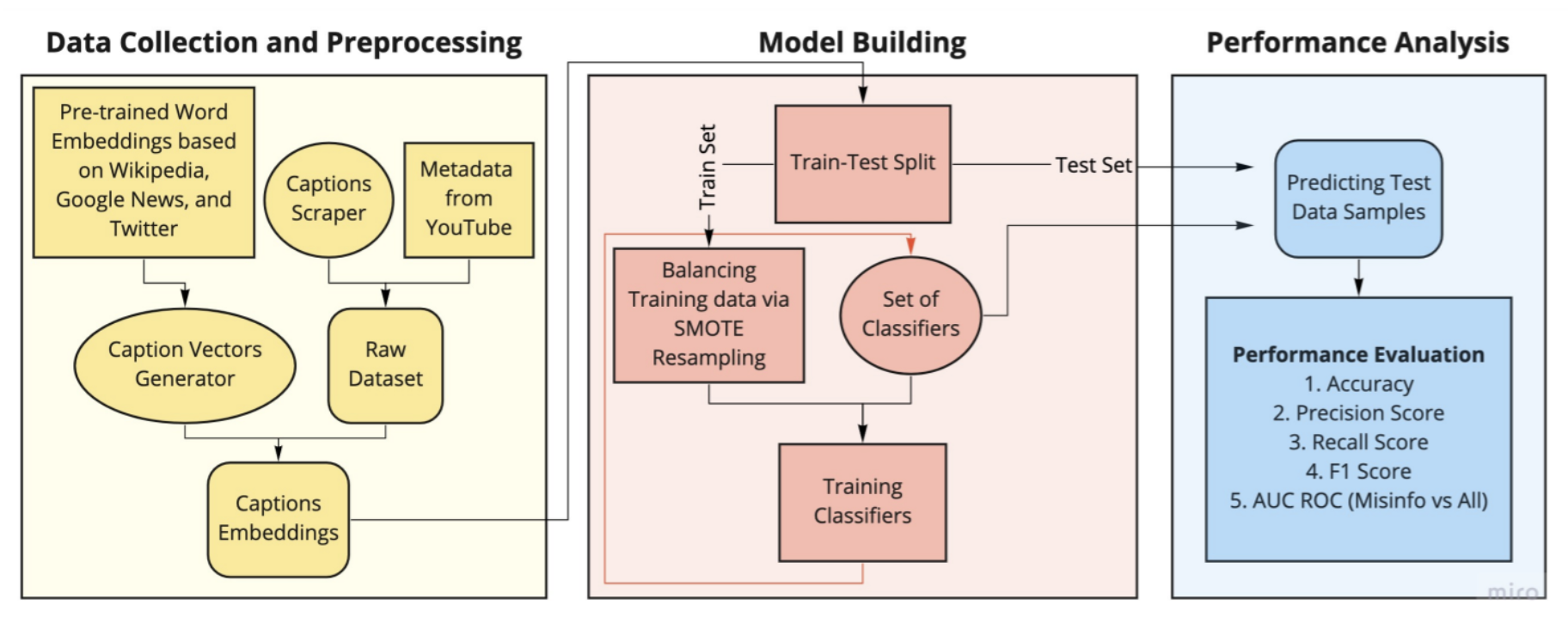

We propose an approach for detecting misinformation videos by exploiting video captions (subtitles). The motivation for exploring captions stems from our analysis of the videos' metadata. Attributes such as the number of views, likes, dislikes, and comments proved ineffective, because videos are hard to tell apart using this information. In addition, titles and descriptions can be misleading. For example, the titles "Question & Answer at The 2018 Sydney Vaccination Conference" and "The Leon Show - Vaccines and our Children" give no hint of misinformation, although both videos do spread it. Specifically, the former video claims that good proteins and vitamins do more than vaccines to help one live a healthier life, and that most immunity resides in the gut, which vaccines can destroy. In the latter video, a physician presents some information in a scientific manner but, partway through, claims that autism rates have skyrocketed since vaccines came into existence.

To evaluate our approach, we use a publicly available, labeled dataset covering five topics: (1) the Vaccines Controversy, (2) the 9/11 Conspiracy, (3) the Chem-trail Conspiracy, (4) the Moon Landing Conspiracy, and (5) the Flat Earth Theory.
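Because the approach relies on caption text rather than metadata, each video's subtitles must first be reduced to plain text before an NLP model can use them. The post does not describe how captions were obtained or cleaned, so the following is only a minimal sketch under stated assumptions: captions are assumed to be in the WebVTT subtitle format, and `caption_text` is a hypothetical helper that keeps only cue text, strips inline tags, and drops lines repeated across consecutive cues.

```python
import re

# Assumption: captions are WebVTT; the paper's actual preprocessing may differ.
# Cue timing line, e.g. "00:00:01.000 --> 00:00:04.000" (optionally followed by settings)
TIMING = re.compile(r"^(\d+:)?\d{2}:\d{2}\.\d{3}\s+-->\s+")
# Inline markup such as <c>, </c>, <i>, or karaoke timestamps like <00:00:01.500>
TAG = re.compile(r"<[^>]+>")


def caption_text(vtt: str) -> str:
    """Flatten a WebVTT caption file into a single lowercase string (hypothetical helper).

    Only cue text is kept; the header, NOTE/STYLE blocks, cue identifiers,
    and timings are discarded.
    """
    kept = []
    # WebVTT separates the header and each cue/block with a blank line
    blocks = re.split(r"\n\s*\n", vtt.replace("\r\n", "\n").strip())
    for block in blocks:
        lines = block.split("\n")
        # A cue has a timing line, optionally preceded by an identifier line;
        # blocks without one (header, NOTE, STYLE) are skipped
        timing_index = next(
            (i for i, ln in enumerate(lines) if TIMING.match(ln.strip())), None
        )
        if timing_index is None:
            continue
        for raw in lines[timing_index + 1:]:
            line = TAG.sub("", raw).strip()
            # Auto-generated captions often repeat a line across consecutive cues
            if line and (not kept or kept[-1] != line):
                kept.append(line)
    return " ".join(kept).lower()


sample = """WEBVTT
Kind: captions
Language: en

1
00:00:00.000 --> 00:00:02.500
Vaccines and <i>our</i> children

2
00:00:02.500 --> 00:00:05.000
Vaccines and <i>our</i> children
are the topic today
"""
print(caption_text(sample))  # → vaccines and our children are the topic today
```

Keeping one string per video fits a bag-of-words or sequence model equally well; any further cleanup (stop words, stemming) would be a separate, assumed step.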

Using various Natural Language Processing (NLP) approaches, we build prediction models that classify videos into three classes: (i) Misinformation, (ii) Debunking Misinformation, and (iii) Neutral. In our experiments, the proposed models classify videos with an F1-score of 0.92 to 0.95 and an AUC ROC of 0.78 to 0.90, showing the effectiveness of our approach.
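The post does not say which NLP models were used or how the multi-class scores were averaged. Purely to illustrate the general setup (caption text in, one of three labels out, scored by F1 and AUC ROC), here is a hypothetical sketch. It uses a multinomial Naive Bayes classifier over bag-of-words caption text, a deliberately simple swapped-in technique and not the authors' model, plus macro-averaged F1 and one-vs-rest AUC helpers; macro and one-vs-rest averaging are assumptions. The label strings, the `NaiveBayes` class, and the helpers `macro_f1`, `ovr_auc`, and `macro_auc` are all hypothetical names.

```python
import math
from collections import Counter

# Hypothetical label names for the three classes described in the post
LABELS = ["misinformation", "debunking", "neutral"]


def tokenize(text):
    # Simple lowercase whitespace tokenization (a stand-in for real NLP preprocessing)
    return text.lower().split()


class NaiveBayes:
    """Multinomial Naive Bayes over bag-of-words caption text (illustrative only)."""

    def fit(self, texts, labels):
        self.vocab = set()
        self.word_counts = {c: Counter() for c in LABELS}
        self.doc_counts = Counter(labels)
        self.n_docs = len(labels)
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def log_scores(self, text):
        # Log prior plus log likelihood of each known token, with add-one smoothing
        v = len(self.vocab)
        scores = {}
        for c in LABELS:
            total = sum(self.word_counts[c].values())
            score = math.log((self.doc_counts[c] + 1) / (self.n_docs + len(LABELS)))
            for tok in tokenize(text):
                if tok in self.vocab:  # unseen words are ignored
                    score += math.log((self.word_counts[c][tok] + 1) / (total + v))
            scores[c] = score
        return scores

    def predict_proba(self, text):
        # Softmax over log scores, shifted by the max for numerical stability
        scores = self.log_scores(text)
        top = max(scores.values())
        exp = {c: math.exp(s - top) for c, s in scores.items()}
        z = sum(exp.values())
        return {c: e / z for c, e in exp.items()}

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


def macro_f1(y_true, y_pred):
    # Unweighted mean of per-class F1 scores
    pairs = list(zip(y_true, y_pred))
    f1s = []
    for c in LABELS:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1s.append(2 * precision * recall / (precision + recall) if precision + recall else 0.0)
    return sum(f1s) / len(f1s)


def ovr_auc(y_true, probas, c):
    # One-vs-rest AUC: chance a random positive outscores a random negative (ties count half)
    pos = [p[c] for t, p in zip(y_true, probas) if t == c]
    neg = [p[c] for t, p in zip(y_true, probas) if t != c]
    if not pos or not neg:
        return float("nan")
    wins = sum((a > b) + 0.5 * (a == b) for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


def macro_auc(y_true, probas):
    # Unweighted mean of the per-class one-vs-rest AUCs
    return sum(ovr_auc(y_true, probas, c) for c in LABELS) / len(LABELS)


# Toy usage with made-up caption snippets (not from the paper's dataset)
train_texts = [
    "vaccines cause autism and destroy gut immunity",
    "studies show vaccines do not cause autism",
    "today we walk through the history of the moon landing",
]
train_labels = ["misinformation", "debunking", "neutral"]
model = NaiveBayes().fit(train_texts, train_labels)
print(model.predict("vaccines destroy immunity"))  # → misinformation
```

Pure Python keeps the sketch dependency-free; in practice, scikit-learn's `f1_score` and `roc_auc_score` provide tested implementations of these metrics, and scores should be computed on held-out videos rather than on training data.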

Read the full article:

https://arxiv.org/pdf/2107.00941.pdf