GLocalX

Artificial Intelligence (AI) has come to prominence as one of the major components of our society, with applications in most aspects of our lives. In this field, complex and highly nonlinear machine learning models such as ensemble models, deep neural networks, and Support Vector Machines have consistently shown remarkable accuracy in solving complex tasks. Yet, they are as unintelligible as they are complex, and relying on them raises significant concerns about their transparency.

Explainable AI (XAI) tackles this problem by providing human-understandable explanations of black-box decisions in one of two forms: a local one, which provides a rationale for a single black-box decision, and a global one, which provides a set of rationales covering any black-box decision. The former is precise, to the point, multi-faceted, and often provides recourse to the decision, that is, a clear way for the user of the system to actively change the decision. The latter, on the other hand, is general and less precise, and is often inaccessible to the user demanding explanations or to the auditor who wishes to peer into the black box.

In “Meaningful explanations of Black Box AI decision systems” [1], we introduced Local to Global explainability as the problem of inferring global, general explanations of a black-box model from a set of local, specific ones. Here we present GLocalX [2], a model-agnostic Local to Global explainability algorithm that produces decision rules.

GLocalX relies on three assumptions:

1. logical explainability, that is, explanations are best provided in a logical form that can be reasoned upon;

2. local explainability, that is, regardless of the complexity of the decision boundary of the black box, locally it can be accurately approximated by an explanation;

3. composability, that is, we can compose local explanations by leveraging their logical form.
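To make the first and third assumptions concrete, here is a minimal Python sketch of what a rule "in logical form" can look like. The encoding is our assumption, not necessarily the one used by GLocalX: a rule is a conjunction of premises, each bounding one feature to a half-open interval `[low, high)`, plus a predicted label. The names `Rule`, `conjoin`, and `conflict` are hypothetical. Because premises are conjunctions of intervals, two rules can be composed (their logical AND is an interval intersection) and reasoned upon, for instance to detect that two rules overlap yet disagree.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# Hypothetical encoding: each premise maps a feature to a half-open interval [low, high).
Premises = Dict[str, Tuple[float, float]]


@dataclass
class Rule:
    premises: Premises
    label: int

    def covers(self, x: Dict[str, float]) -> bool:
        # The rule fires only if every premise (conjunct) holds for x.
        return all(lo <= x[f] < hi for f, (lo, hi) in self.premises.items())


def conjoin(p: Premises, q: Premises) -> Optional[Premises]:
    """Logical AND of two premise sets, i.e. the intersection of two boxes.

    Returns None when the conjunction is unsatisfiable."""
    out = dict(p)
    for f, (lo, hi) in q.items():
        # A feature absent from p is unconstrained there.
        p_lo, p_hi = out.get(f, (-math.inf, math.inf))
        new_lo, new_hi = max(p_lo, lo), min(p_hi, hi)
        if new_lo >= new_hi:
            return None
        out[f] = (new_lo, new_hi)
    return out


def conflict(a: Rule, b: Rule) -> bool:
    """Two rules conflict if some point satisfies both but they predict differently."""
    return a.label != b.label and conjoin(a.premises, b.premises) is not None


r1 = Rule({"age": (20, 30)}, 1)
r2 = Rule({"age": (25, 40), "priors": (3, math.inf)}, 0)
print(conjoin(r1.premises, r2.premises))  # {'age': (25, 30), 'priors': (3, inf)}
print(conflict(r1, r2))                   # True
```

Interval premises are chosen here because they keep the conjunction closed-form; richer premise languages would need a more general satisfiability check.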

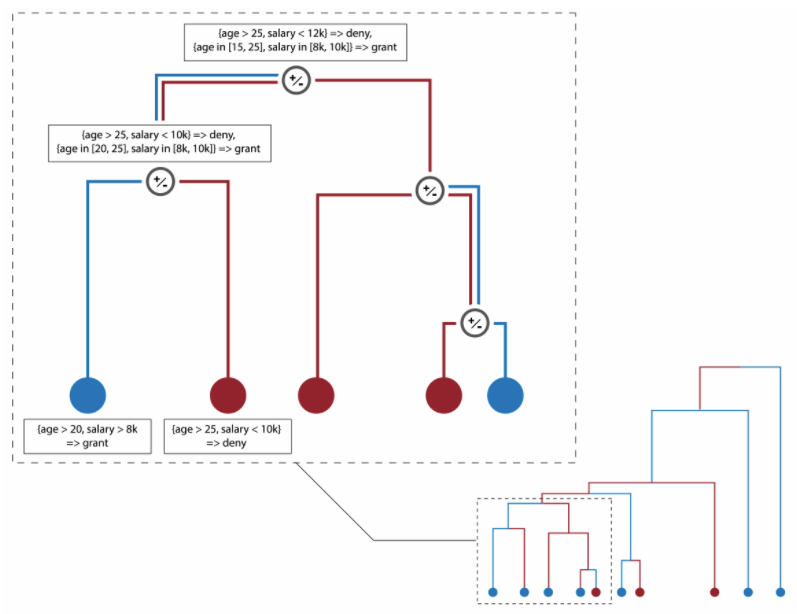

GLocalX starts from a set of local explanations in the form of decision rules, which form the leaves of a hierarchical merge structure. It then iteratively merges explanations in a bottom-up fashion, and the top layer of the resulting structure yields the global explanations.
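The bottom-up structure can be sketched as a greedy agglomerative loop. This is a simplified illustration, not the GLocalX implementation: it merges one pair per step, and the `merge` callable doubles as a placeholder acceptance test (returning `None` rejects the merge), whereas the paper defines its own acceptance criterion. The function name `merge_bottom_up` and the toy explanations (a label plus the ids of covered instances) are hypothetical.

```python
from itertools import combinations
from typing import Callable, List, Optional, TypeVar

E = TypeVar("E")


def merge_bottom_up(
    leaves: List[E],
    similarity: Callable[[E, E], float],
    merge: Callable[[E, E], Optional[E]],
) -> List[List[E]]:
    """Greedy bottom-up merging; returns every layer, from the leaves to the top."""
    current = list(leaves)
    layers = [current]
    while len(current) > 1:
        # Rank all pairs of current explanations from most to least similar.
        pairs = sorted(
            combinations(range(len(current)), 2),
            key=lambda ij: similarity(current[ij[0]], current[ij[1]]),
            reverse=True,
        )
        for i, j in pairs:
            merged = merge(current[i], current[j])  # None means "merge rejected"
            if merged is not None:
                current = [e for k, e in enumerate(current) if k not in (i, j)] + [merged]
                layers.append(current)
                break
        else:
            break  # no pair can be merged: the current layer is the top one
    return layers


# Toy explanations: (label, ids of covered instances).
def jaccard(a, b):
    return len(a[1] & b[1]) / len(a[1] | b[1])


def union_if_compatible(a, b):
    # Placeholder acceptance test: merge only overlapping explanations with the same label.
    if a[0] == b[0] and a[1] & b[1]:
        return (a[0], a[1] | b[1])
    return None


leaves = [("yes", frozenset({1, 2, 3})), ("yes", frozenset({2, 3, 4})),
          ("no", frozenset({7, 8})), ("no", frozenset({8, 9}))]
layers = merge_bottom_up(leaves, jaccard, union_if_compatible)
print(len(layers))  # 3
print(layers[-1])   # one "yes" and one "no" explanation remain
```

Keeping every intermediate layer mirrors the hierarchical merge structure described above: the first layer holds the local rules, the last one the global explanations.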


To merge explanations, we need to answer two questions: which explanations do we merge, and how do we merge them? We answer the former with a coverage-based similarity function and the latter with an approximate logical union. Similarity is the Jaccard similarity of the explanations' data coverage: the larger the set of instances two explanations both explain, relative to the instances either of them explains, the more similar they are. The approximate logical union is computed as an approximate union of the rules' polyhedra, either by generalizing similar rules with the same prediction or by specializing similar rules with different predictions. These two operations work jointly to create global rules without overgeneralizing them and losing their predictive power.
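A minimal Python sketch of both ingredients follows, assuming the same hypothetical interval encoding of premises (feature to half-open interval `[low, high)`, missing features unconstrained). `coverage_jaccard` is the Jaccard similarity of covered instances. `generalize` and `specialize` are simplified stand-ins for the approximate union, not the paper's exact operators: generalization takes the smallest box containing both rules, and specialization trims one rule on a single feature so that it no longer overlaps a rule with a different prediction. All function names are our own.

```python
import math
from typing import Dict, List, Optional, Tuple

# Hypothetical encoding: feature -> half-open interval [low, high); a missing feature is unconstrained.
Premises = Dict[str, Tuple[float, float]]
FULL = (-math.inf, math.inf)


def covers(p: Premises, x: Dict[str, float]) -> bool:
    return all(lo <= x[f] < hi for f, (lo, hi) in p.items())


def coverage_jaccard(p: Premises, q: Premises, data: List[Dict[str, float]]) -> float:
    """Jaccard similarity between the sets of instances the two rules cover."""
    cov_p = {i for i, x in enumerate(data) if covers(p, x)}
    cov_q = {i for i, x in enumerate(data) if covers(q, x)}
    union = cov_p | cov_q
    return len(cov_p & cov_q) / len(union) if union else 0.0


def generalize(p: Premises, q: Premises) -> Premises:
    """Same prediction: the smallest box containing both rules.
    A feature constrained in only one rule is free in the other, so it is dropped."""
    return {f: (min(p[f][0], q[f][0]), max(p[f][1], q[f][1])) for f in p.keys() & q.keys()}


def overlap(p: Premises, q: Premises) -> bool:
    """True if the two boxes intersect on every feature."""
    for f in p.keys() | q.keys():
        (p_lo, p_hi), (q_lo, q_hi) = p.get(f, FULL), q.get(f, FULL)
        if max(p_lo, q_lo) >= min(p_hi, q_hi):
            return False
    return True


def specialize(p: Premises, q: Premises) -> Optional[Premises]:
    """Different predictions: trim p on one feature so it no longer overlaps q.
    Returns None if no single-feature cut works (e.g. p lies inside q)."""
    if not overlap(p, q):
        return dict(p)
    for f, (q_lo, q_hi) in q.items():
        p_lo, p_hi = p.get(f, FULL)
        # Parts of p's interval lying below and above q's interval on feature f.
        pieces = [iv for iv in ((p_lo, q_lo), (q_hi, p_hi)) if iv[0] < iv[1]]
        if pieces:
            # Keep the wider leftover piece of p on this feature.
            return {**p, f: max(pieces, key=lambda iv: iv[1] - iv[0])}
    return None


data = [{"age": a} for a in (22, 26, 29, 33, 45)]
r1, r2 = {"age": (20, 30)}, {"age": (25, 40)}  # assume both predict the same class
r3 = {"age": (28, 50)}                         # assume this one predicts the other class
print(coverage_jaccard(r1, r2, data))  # 0.5
print(generalize(r1, r2))              # {'age': (20, 40)}
print(specialize(r1, r3))              # {'age': (20, 28)}
```

The two operations pull in opposite directions: generalizing widens coverage, while specializing carves out regions claimed by a conflicting rule, which is what keeps merged rules from drifting too far from the black box.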

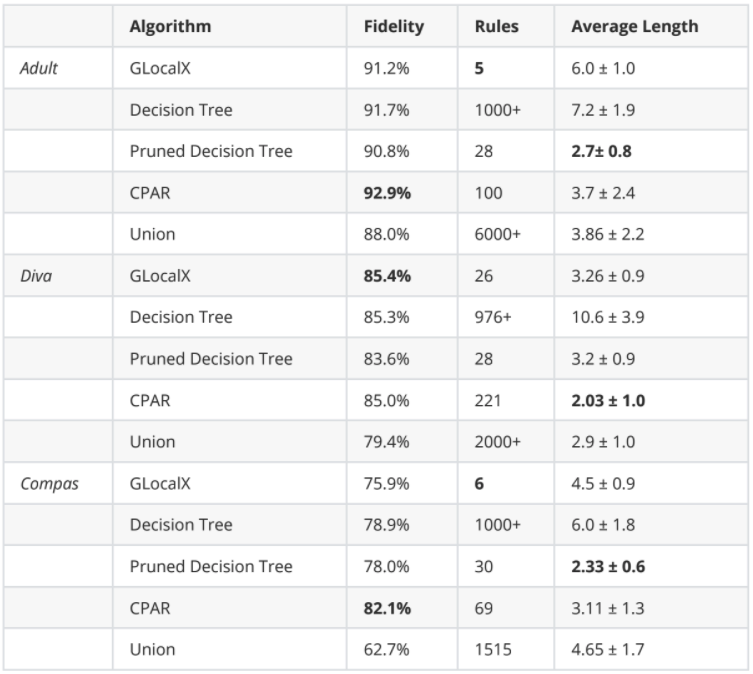

The following table shows the results of this Local to Global approach on two benchmark datasets (Adult and Compas) and on Diva, a real-world fraud-detection dataset:

While the model that most faithfully mimics the black box changes from dataset to dataset, GLocalX shows a balanced performance, preserving comparable fidelity at a competitive complexity, here measured as the number of rules and their length.
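The two quantities being traded off can be sketched as follows. This is an illustration under stated assumptions, not the paper's evaluation code: fidelity is computed as plain agreement with the black box's labels (the paper may use a different score), overlapping rules are resolved by a hypothetical first-match policy with a default label, and "length" is taken to be the average number of premises per rule. Data and labels are toy values, not results.

```python
import math
from typing import Dict, List, Sequence, Tuple

Premises = Dict[str, Tuple[float, float]]  # feature -> half-open interval [low, high)
Rule = Tuple[Premises, int]                # (premises, predicted label)


def covers(p: Premises, x: Dict[str, float]) -> bool:
    return all(lo <= x[f] < hi for f, (lo, hi) in p.items())


def predict(rules: List[Rule], x: Dict[str, float], default: int) -> int:
    # Assumed policy: the first rule that fires decides; otherwise fall back to a default label.
    for premises, label in rules:
        if covers(premises, x):
            return label
    return default


def fidelity(rules: List[Rule], data: List[Dict[str, float]],
             bb_labels: Sequence[int], default: int) -> float:
    """Share of instances on which the rule set agrees with the black box."""
    agree = sum(predict(rules, x, default) == y for x, y in zip(data, bb_labels))
    return agree / len(data)


def complexity(rules: List[Rule]) -> Tuple[int, float]:
    """Number of rules and average rule length (number of premises)."""
    n = len(rules)
    return n, (sum(len(p) for p, _ in rules) / n if n else 0.0)


rules = [({"priors": (3, math.inf)}, 1), ({"age": (20, 25), "priors": (1, 3)}, 1)]
data = [{"age": 22, "priors": 4}, {"age": 22, "priors": 2},
        {"age": 40, "priors": 0}, {"age": 30, "priors": 2}]
bb_labels = [1, 1, 0, 1]  # toy black-box outputs, not real results
print(fidelity(rules, data, bb_labels, default=0))  # 0.75
print(complexity(rules))                            # (2, 1.5)
```

Adding rules or premises usually raises fidelity and complexity together, which is why the two are reported side by side.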

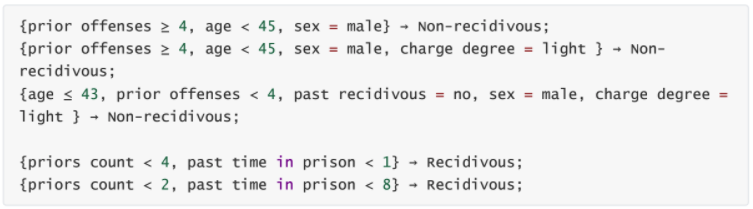

Here we show rules extracted from a deep neural network trained on Compas, a recidivism prediction dataset known for its bias against African-American defendants [3]:

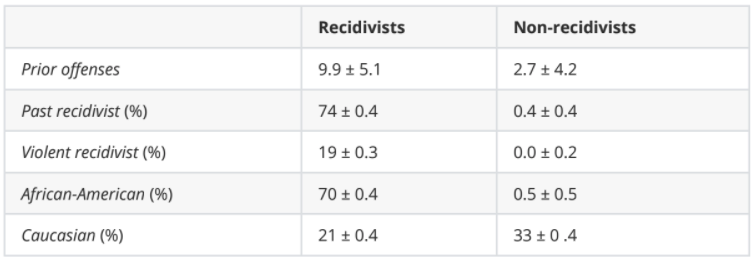

Starting from over 1,000 local decision rules, GLocalX learned 5 global rules that subsume them while achieving performance comparable to the other models. These rules highlight how the model relies on simple, trivial heuristics to predict recidivist behavior, while singling out outliers for the non-recidivist class. When looking at other features of the defendants that each group of rules explains, a different trend emerges:

Although the rules completely ignore the defendants' race, they have found a proxy for it: the recidivist rules target African-Americans in a whopping 70% of cases on average, and Caucasians in only 21%. Another proxy found by the rules is past recidivism: the two sets of explanations target two very different sets of defendants, with an average past recidivism rate of 74% for those covered by the recidivist rules and 0.4% for those covered by the non-recidivist rules.
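The proxy effect can be reproduced in miniature. The sketch below assumes, as a reading of "on average" rather than something the text specifies, that a group's share is computed per rule over the instances it covers and then averaged across rules. The toy rules test only `priors`, never `group`, yet their coverage is skewed toward group `A` because `priors` correlates with it in the data. The function `average_group_share` and all data are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Premises = Dict[str, Tuple[float, float]]  # feature -> half-open interval [low, high)


def covers(p: Premises, x: Dict) -> bool:
    return all(lo <= x[f] < hi for f, (lo, hi) in p.items())


def average_group_share(rules: List[Premises], data: List[Dict], attr: str, group: str) -> float:
    """For each rule, the fraction of covered instances with x[attr] == group,
    averaged over the rules that cover at least one instance."""
    shares = []
    for p in rules:
        covered = [x for x in data if covers(p, x)]
        if covered:
            shares.append(sum(x[attr] == group for x in covered) / len(covered))
    return sum(shares) / len(shares) if shares else 0.0


# Toy data: half the population is in group "A", but "A" members have more priors.
data = [{"priors": 5, "group": "A"}, {"priors": 4, "group": "A"},
        {"priors": 6, "group": "A"}, {"priors": 0, "group": "B"},
        {"priors": 1, "group": "B"}, {"priors": 5, "group": "B"}]
rules = [{"priors": (3, math.inf)}, {"priors": (5, math.inf)}]  # never mention "group"
print(average_group_share(rules, data, "group", "A"))  # ≈ 0.71, vs. 0.5 in the population
```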

Author: Mattia Setzu

References

[1] Dino Pedreschi et al. “Meaningful explanations of Black Box AI decision systems”. In Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 33. No. 01. 2019, pp. 9780–9784.

[2] Mattia Setzu et al. “GLocalX - From Local to Global Explanations of Black Box AI Models”. In Artificial Intelligence 294 (May 2021), p. 103457. ISSN: 0004-3702. DOI: 10.1016/j.artint.2021.103457. URL: http://dx.doi.org/10.1016/j.artint.2021.103457.

[3] Jeff Larson et al. “How We Analyzed the COMPAS Recidivism Algorithm”. ProPublica, 2016.