Understanding Any Time Series Classifier with a Subsequence-based Explainer

In recent years, the wide availability of data stored as time series has contributed to the spread of extremely accurate time series classifiers used in high-stakes decision making. Unfortunately, the best time series classifiers are usually black boxes, and are therefore hard for humans to understand. This has slowed the adoption of these models in critical domains, where explanations are crucial for a transparent interaction between the human expert and the AI system.

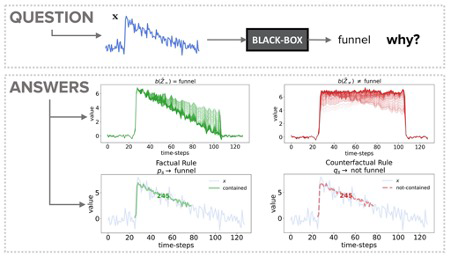

We tackled the problem of explaining black-box time series classifiers in [1] (http://www.sobigdata.eu/blog/explaining-any-time-series-classifier) by building LASTS, a Local Agnostic Subsequence-based Time Series explainer, whose objective is to return an explanation that is easy for a human to understand. The explanation returned by LASTS has two parts. The first part is more intuitive: it consists of exemplar and counterexemplar instances showing how the prediction of the black-box changes depending on the shape of the time series. The second part is rule-based: it contains factual and counterfactual rules that explain the decision of the black-box in terms of logical conditions on time series subsequences. An example of the explanation for an instance of the Cylinder-Bell-Funnel dataset can be seen in Fig. 1.

Figure 1. An example of the explanation returned by LASTS for an instance of the dataset Cylinder-Bell-Funnel. The instance x is correctly classified by the black-box as funnel. The first row of the explanation is made of exemplars (green) and counterexemplars (red), the second row shows the subsequence-based factual and counterfactual rules. In this case the rules state that “if the subsequence 245 is contained in the time series x then the class is funnel, else the class is not funnel”.
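The rule in Fig. 1 can be read as a simple test on the time series. The following is a minimal sketch of such a test, assuming that "contained" means that some window of the series lies within a distance threshold of the subsequence. The function names, the Euclidean distance and the threshold value are illustrative assumptions, not the actual LASTS implementation.

```python
import math


def min_sliding_distance(series, subsequence):
    """Smallest Euclidean distance between the subsequence and any
    window of the series with the same length."""
    m = len(subsequence)
    if m == 0 or m > len(series):
        raise ValueError("subsequence must be non-empty and no longer than the series")
    return min(
        math.dist(series[start:start + m], subsequence)
        for start in range(len(series) - m + 1)
    )


def contains(series, subsequence, threshold):
    """Assumed notion of containment: the best-matching window is close enough."""
    return min_sliding_distance(series, subsequence) <= threshold


def apply_rule(series, subsequence, threshold, label="funnel"):
    """Factual branch if the subsequence is contained, counterfactual branch otherwise."""
    return label if contains(series, subsequence, threshold) else f"not {label}"


# Toy data, invented for illustration only.
pattern = [1.0, 2.0, 3.0]
with_pattern = [0.0, 0.0, 1.0, 2.0, 3.0, 0.0, 0.0]
flat = [0.0] * 7

print(apply_rule(with_pattern, pattern, threshold=0.5))  # funnel
print(apply_rule(flat, pattern, threshold=0.5))          # not funnel
```

In this reading, the factual rule and the counterfactual rule are the two branches of the same containment test.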

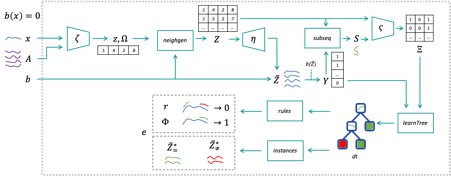

LASTS is a modular framework with three main parts: an autoencoder, a neighborhood generation algorithm and an interpretable surrogate. In simple terms, the autoencoder first encodes the time series to explain into a simpler latent representation. Then, in this latent space, an algorithm generates a neighborhood of new instances similar to the input instance. These instances can be either exemplars or counterexemplars. The generated neighborhood is then decoded and used to train an interpretable subsequence-based decision tree that imitates the decision of the black-box, explaining its prediction in terms of subsequences that must, and must not, be contained in the time series. A detailed schema of the framework is depicted in Fig. 2.

Figure 2. A detailed diagram of the building blocks of LASTS (updated version).
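The neighborhood generation step of this pipeline can be illustrated with a minimal sketch. It assumes that the decoder and the black-box are available as plain callables and that the neighborhood is obtained by adding Gaussian noise to the latent vector. The names `generate_neighborhood`, `toy_decode` and `toy_black_box`, as well as the noise scale, are hypothetical stand-ins and do not reproduce the algorithms used in LASTS.

```python
import random


def generate_neighborhood(z, decode, black_box, n_samples=200, scale=0.5, seed=0):
    """Perturb the latent vector z, decode each perturbed point and split the
    decoded series by whether the black-box keeps the original prediction."""
    rng = random.Random(seed)
    target = black_box(decode(z))
    exemplars, counterexemplars = [], []
    for _ in range(n_samples):
        # Assumed perturbation: independent Gaussian noise on each latent dimension.
        z_new = [value + rng.gauss(0.0, scale) for value in z]
        series = decode(z_new)
        if black_box(series) == target:
            exemplars.append(series)
        else:
            counterexemplars.append(series)
    return target, exemplars, counterexemplars


def toy_decode(z):
    """Hypothetical decoder: a one-dimensional latent value becomes a line's slope."""
    return [z[0] * t for t in range(1, 6)]


def toy_black_box(series):
    """Hypothetical classifier: label a series by the sign of its last value."""
    return "up" if series[-1] > 0 else "down"


target, exemplars, counterexemplars = generate_neighborhood(
    [0.2], toy_decode, toy_black_box
)
print(target, len(exemplars), len(counterexemplars))
```

The decoded exemplars and counterexemplars, labeled by the black-box, would then serve as the training set for the interpretable surrogate.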

In a recent work, currently under review, we build upon this framework by improving its main building blocks. Specifically, this newer version of LASTS uses a variational autoencoder (VAE) [2], which ensures that the compressed time series in the latent space are normally distributed. This property greatly simplifies the neighborhood generation, allowing us to test and use simpler and faster algorithms. Moreover, in the updated approach, the neighborhood is sampled directly around the decision boundary, ensuring a balanced number of exemplars and counterexemplars. Finally, for the surrogate, we keep the decision tree but compare the older shapelet-based subsequence extraction with a SAX-based one [3].
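To give an idea of what a SAX-based representation looks like, here is a minimal sketch of the classic SAX transformation: z-normalization, piecewise aggregate approximation and discretization with standard normal breakpoints. The function name `sax_word` and its parameters are illustrative assumptions. This is the textbook single-resolution variant, not necessarily the one used in [3] or in LASTS.

```python
from bisect import bisect_right
from statistics import NormalDist, mean, pstdev


def sax_word(series, n_segments, alphabet="abcd"):
    """Convert a time series into a SAX word with one symbol per segment."""
    if n_segments <= 0 or len(series) % n_segments != 0:
        raise ValueError("series length must be a positive multiple of n_segments")

    # 1. Z-normalize the series (a constant series maps to all zeros).
    mu, sigma = mean(series), pstdev(series)
    normalized = [0.0 if sigma == 0 else (x - mu) / sigma for x in series]

    # 2. Piecewise aggregate approximation: mean of each equal-length segment.
    seg_len = len(series) // n_segments
    paa = [
        mean(normalized[i * seg_len:(i + 1) * seg_len])
        for i in range(n_segments)
    ]

    # 3. Breakpoints that split the standard normal into equiprobable bins.
    k = len(alphabet)
    breakpoints = [NormalDist().inv_cdf(i / k) for i in range(1, k)]

    # 4. Map each segment mean to the symbol of the bin it falls into.
    return "".join(alphabet[bisect_right(breakpoints, value)] for value in paa)


print(sax_word([0, 0, 0, 0, 10, 10, 10, 10], n_segments=2))  # ad
print(sax_word([0, 1, 2, 3, 4, 5, 6, 7], n_segments=4))      # abcd
```

Subsequences of such words can then play the same role as shapelets when building rules on top of the decision tree.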

We benchmarked this new approach on 4 datasets and 2 black-boxes, testing SAX-based and shapelet-based subsequence extraction and 4 new neighborhood generation approaches. The results are very promising: the new version of LASTS is one order of magnitude faster than the older one while maintaining all its qualitative properties. Moreover, the explanations returned by LASTS are faithful, meaningful and stable, and compare well with those of SHAP [4], one of the most widely adopted agnostic approaches.

Author: Francesco Spinnato

Exploratory: Social Impact of AI and Explainable Machine Learning

REFERENCES

[1] R. Guidotti, A. Monreale, F. Spinnato, D. Pedreschi and F. Giannotti. Explaining Any Time Series Classifier. IEEE Second International Conference on Cognitive Machine Intelligence (CogMI), 2020.

[2] D. P. Kingma, et al. Auto-encoding variational Bayes. ICLR, 2014.

[3] L. Nguyen, et al. Interpretable time series classification using linear models and multi-resolution multi-domain symbolic representations. DAMI 33 (4) (2019) 1183–1222.

[4] S. Lundberg and S.-I. Lee. A unified approach to interpreting model predictions, 2017.