I took advantage of the SoBigData++ Transnational Access program to develop a collaboration with the CEU research unit led by János Kertész in Vienna, Austria, on the project titled “Analysis of opinion dynamics over a realistic dynamic social network”. I was hosted for three weeks by the Department of Network and Data Science and worked closely with the unit. We obtained very interesting results, which we plan to summarize shortly in a conference paper.

This article delves into Continual Learning (CL) and its intersection with Explainable AI (XAI). It introduces novel metrics to assess how explanations change as neural networks adapt to evolving data. Unlike traditional CL approaches, this study focuses on understanding the factors influencing explanation variations, such as data domain, model architecture, and CL strategy. Through the lens of SHapley Additive exPlanations (SHAP), the research benchmarks these factors across datasets and models, shedding light on explanation dynamics in CL scenarios.

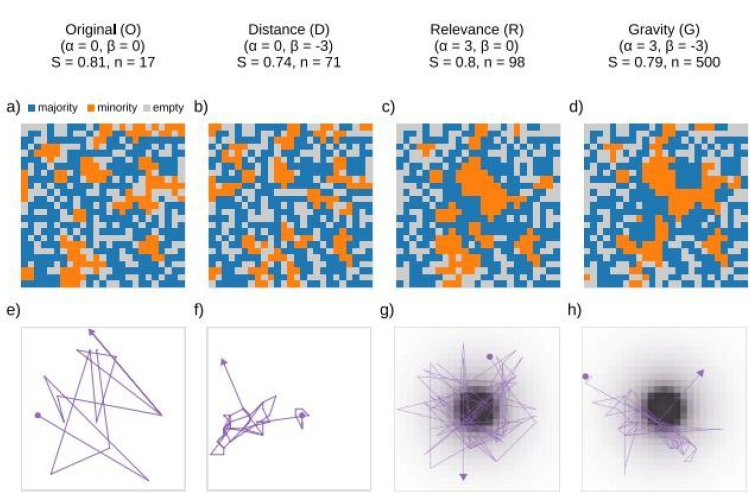

A study conducted by the Institute of Information Science and Technologies (ISTI) of the National Research Council (CNR) has revealed new mechanisms for the formation of urban segregation through the analysis of human mobility within agent-based models. This research, which represents an important evolution of the models introduced by Nobel laureate in economics Thomas Schelling in 1971, offers a deeper perspective on the complexity of social and urban phenomena.

Segregation of people in urban contexts, i.e., the tendency of people to cluster together or move apart depending on the presence or absence of similar others, is a long-studied phenomenon. Understanding urban segregation is crucial for policymakers seeking to prevent negative social consequences such as limited access to quality education, healthcare, and employment opportunities.
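To make the Schelling mechanism concrete, here is a minimal sketch of the classic 1971-style grid model only, not the ISTI mobility-based extension. It assumes two groups on a wrap-around grid, a tolerance threshold (an agent is unhappy if fewer than `threshold` of its occupied neighbors belong to its group), and random relocation of unhappy agents to empty cells. All function names and parameter values are hypothetical and chosen for illustration.

```python
import random

def neighbours(grid, r, c):
    """Yield the values of the 8 surrounding cells of (r, c); the grid wraps around."""
    n = len(grid)
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr or dc:
                yield grid[(r + dr) % n][(c + dc) % n]

def similar_share(grid, r, c):
    """Fraction of occupied neighbours in the same group as (r, c); 1.0 if isolated."""
    me = grid[r][c]
    occupied = [v for v in neighbours(grid, r, c) if v != 0]
    if not occupied:
        return 1.0
    return sum(v == me for v in occupied) / len(occupied)

def mean_similarity(grid):
    """Average similar-neighbour share over all agents: a simple segregation index."""
    n = len(grid)
    shares = [similar_share(grid, r, c)
              for r in range(n) for c in range(n) if grid[r][c] != 0]
    return sum(shares) / len(shares)

def run_schelling(size=30, empty_frac=0.1, threshold=0.5, steps=50, seed=0):
    """Hypothetical Schelling run: 0 = empty cell, 1 and 2 = the two groups."""
    rng = random.Random(seed)
    cells = size * size
    n_empty = int(cells * empty_frac)
    n_a = (cells - n_empty) // 2
    flat = [0] * n_empty + [1] * n_a + [2] * (cells - n_empty - n_a)
    rng.shuffle(flat)
    grid = [flat[i * size:(i + 1) * size] for i in range(size)]

    for _ in range(steps):
        # Unhappy agents are identified on a snapshot of the current grid.
        unhappy = [(r, c) for r in range(size) for c in range(size)
                   if grid[r][c] != 0 and similar_share(grid, r, c) < threshold]
        empty = [(r, c) for r in range(size) for c in range(size) if grid[r][c] == 0]
        if not unhappy or not empty:
            break  # everyone is satisfied, or there is nowhere to move
        rng.shuffle(unhappy)
        for r, c in unhappy:
            # Move the agent to a random empty cell; its old cell becomes empty.
            i = rng.randrange(len(empty))
            er, ec = empty[i]
            grid[er][ec], grid[r][c] = grid[r][c], 0
            empty[i] = (r, c)
    return grid
```

For example, `mean_similarity(run_schelling(seed=42))` can be compared with the roughly 0.5 share expected under a random mix. In the classic model, even this mild preference tends to produce strongly clustered neighborhoods. That is the emergent effect the ISTI study revisits with realistic human mobility.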

Cryptocurrencies have the potential to revolutionize the world of finance, providing individuals with more freedom and trustless services. However, this new paradigm also comes with high risks for inexperienced users.

From a TNA experience.

Author: Shakshi Sharma. Host organization: University of Sheffield, UK. Home university: University of Tartu, Estonia.

Explainable AI (XAI) has gained popularity in recent years, with new theoretical approaches, and libraries providing computationally efficient explanation algorithms, being proposed almost daily. Given the growing number of algorithms and the lack of standardized evaluation metrics, it is difficult to assess the quality of explanation methods quantitatively. This work proposes a benchmark for explanation methods, focusing on post-hoc methods that generate local explanations for images and tabular data.

By Simona Re (ELI), Angelo Facchini (IMT), Daniele Fadda (CNR-ISTI)

In this era of global climate and ecological crisis, rethinking our relationship with nature and developing a sustainable and innovative management of natural resources can play a critical role in ensuring both nature conservation and the well-being of citizens.

Geolet simplifies complex mobility data and outperforms black-box models in accuracy while being much faster. Thanks to its improved interpretability, it supports more informed decisions in domains such as traffic management and disease control.